Installing a 3-node Kubernetes cluster on Debian 12 LXC containers in Proxmox with Terraform

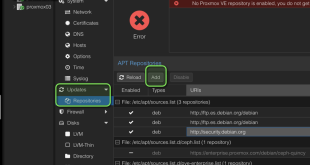

The other day we explained how to deploy LXC containers on Proxmox with Terraform.

Here is that post:

Terraform: Create LXC containers in Proxmox

We will build on that post to create a Kubernetes cluster on LXC containers in Proxmox, passing the parameters to each container through a script so that the whole configuration is automated.

The cluster will consist of:

- One master node and 2 worker nodes

- Debian 12 LXC containers

- I'm going to write this post using k8s. We could use k3s instead and keep it simpler, but it's always more fun to work out the complex solution.

Let's get to the procedure…

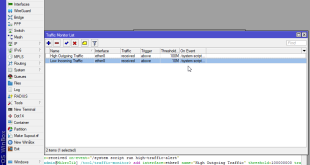

Proxmox host requirements

For those of you who are new, here are the host requirements:

- Check that the `br_netfilter` module is loaded (the following file only exists when it is):

  ```bash
  cat /proc/sys/net/bridge/bridge-nf-call-iptables
  ```

- Disable swap:

  ```bash
  sysctl vm.swappiness=0
  swapoff -a
  ```

- Enable IP forwarding:

  ```bash
  echo 'net.ipv4.ip_forward=1' >> /etc/sysctl.conf
  sysctl --system
  ```
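The checks above can be bundled into a small script to run on the Proxmox host before deploying anything. This is a minimal sketch. The function names are hypothetical, and the optional path arguments exist only so the functions can be tested against fake files:

```bash
#!/usr/bin/env bash
# Sketch: verify the Proxmox host prerequisites listed above.
# Function names are hypothetical; the optional path arguments exist only for testing.

# Print the value of a sysctl key (e.g. net.ipv4.ip_forward) read from /proc/sys.
# Returns non-zero if the key does not exist (e.g. br_netfilter not loaded).
sysctl_value() {
  local key="$1" root="${2:-/proc/sys}"
  local path="$root/${key//.//}"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
  [ -r "$path" ] || return 1
  cat "$path"
}

# Succeed if any swap device is active: /proc/swaps has a header plus one line per device.
swap_active() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -gt 1 ]
}

# Print one line per failed check and return non-zero if anything failed.
check_host_prereqs() {
  local root="${1:-/proc/sys}" swaps="${2:-/proc/swaps}" failed=0
  if [ "$(sysctl_value net.bridge.bridge-nf-call-iptables "$root")" != "1" ]; then
    echo "FAIL: net.bridge.bridge-nf-call-iptables is not 1 (is br_netfilter loaded?)"
    failed=1
  fi
  if [ "$(sysctl_value net.ipv4.ip_forward "$root")" != "1" ]; then
    echo "FAIL: net.ipv4.ip_forward is not 1"
    failed=1
  fi
  if swap_active "$swaps"; then
    echo "FAIL: swap is still active"
    failed=1
  fi
  return "$failed"
}
```

Run `check_host_prereqs` as root on the host. If the bridge check fails, `modprobe br_netfilter` loads the module. Also note that `sysctl vm.swappiness=0` and `swapoff -a`, as shown, do not persist across a reboot.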

Creating the Kubernetes cluster with Terraform

We generate the keys used to manage the containers, either with a web-based generator or from the command line:

```bash
ssh-keygen -t rsa -b 4096 -f ~/proxmox-kubernetes/ssh-keys/id_rsa -C "k8s-admin@negu.local"
```
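main.tf expects one private key per container (id_rsa0, id_rsa1 and id_rsa2 under /TERRAFORM/KUBERNETES-CLUSTER/.ssh), so the command above has to be run once per node. The following is a sketch of that loop. The function name is hypothetical, and the default directory is taken from main.tf, so adjust it to your own layout:

```bash
#!/usr/bin/env bash
# Sketch: one RSA key pair per container, named id_rsa0..id_rsa2 as main.tf expects.
# generate_node_keys is a hypothetical helper; the default directory comes from main.tf.
generate_node_keys() {
  local key_dir="${1:-/TERRAFORM/KUBERNETES-CLUSTER/.ssh}" i
  mkdir -p "$key_dir"
  chmod 700 "$key_dir"
  for i in 0 1 2; do
    # Never overwrite a key that may already be deployed in a container
    if [ -e "$key_dir/id_rsa$i" ]; then
      echo "skipping $key_dir/id_rsa$i (already exists)"
      continue
    fi
    # -N "" creates the key without a passphrase so it can be used unattended
    ssh-keygen -q -t rsa -b 4096 -N "" -C "k8s-admin@negu.local" -f "$key_dir/id_rsa$i"
  done
}
```

Each run leaves id_rsaN (private, referenced by `private_key` in main.tf) and id_rsaN.pub (whose content goes into `ssh_keyN` in vars.tf).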

Here is the key data so you can follow the configuration files:

```
##### MASTER ######
Public key:
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQDJJlqRT1bgVT4cyR0ZNz6pC6oYsXWVt/91cPxZrxcrQp4aCUTWplfzRDiiZpvipuaA7hI5XO/1nDauLxFlJw2rMEdKn+eiuFzF3kBHjujYATo6LIKT+bD5IuuLrgFoR6Xj3sSUCU3kri8l61knW+z4GvEFKUN3dvOa3evYoglt4w==
Private key (PEM)
-----BEGIN RSA PRIVATE KEY-----
MIICXAIBAAKBgQDJJlqRT1bgVT4cyR0ZNz6pC6oYsXWVt/91cPxZrxcrQp4aCUTW
plfzRDiiZpvipuaA7hI5XO/1nDauLxFlJw2rMEdKn+eiuFzF3kBHjujYATo6LIKT
+bD5IuuLrgFoR6Xj3sSUCU3kri8l61knW+z4GvEFKUN3dvOa3evYoglt4wIDAQAB
AoGAHwxwhmV8v3vo7oCMoUvJvEY0p0MdJ1MTd4lNKnrAVMkfpl0v5wIeKUqqg0bb
YQzqH5Sf84LI91x5hEF3qelxTmk/fIWj2+zVKYXPwGWWHtYC47RZUt7yKXI8rIwA
6JebWrRqH6zZiJYPDHlJU9wEqz2bpugk6BjZNYCCNszyq4ECQQDeTY6vNj//I7eF
Tx2+rVncZNU4CEr9d2F+o7xJOcPKrZCg/CcnCzgmjiy8PwBsuKYTJn1kbbKzgme2
p1SAmmsTAkEA56PzfBI4FwgauRMEoTMuedHO2z8Wd33o6npAWDDm+v9aO1Uge/3n
wdfGDnY9EZuW6FaDOAjhq2srAwk3HnX78QJAGllnCC2N+FfrcMmn5On3NMBe5X1W
JiT4UWJm9ub55hQciHhay63wweEoPEfbbQeV578wLa8y90QFtwiuY2/qMQJBANBg
WWl0TkbRwJBavmBw1U864RWz0/ccKgm8feOX4kFKspYLRxSjXPewrndWACu1xnQt
Vw9yVefJmUC66n2Zb1ECQFECY4sjdKQfxOZtTz24Gik1EnriCBUr5IPDrHk5d7yy
9HrFDd3ROoF9qNHLqgRXqAL6KTeEmddIhFvHUIS35b4=
-----END RSA PRIVATE KEY-----
###### WORKER 1 #######
Public key:
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQC2z2xAds9bKacV2Duehc+rS4GLpNcxuiSjlBn+zzkIiYbLcit9pwoSpNq7nLa8UH2ebXnbOX0+p4dPsIXpvYk+5iWTI1a94v0Lgk/16FkiRmUwtazTvgwlYMmKzbVUl8Zy6ZLF7LKUCFYrN+4sdIHGBHNo9/A0Fd49MLdGH7/X1Q==
Private key (PEM)
-----BEGIN RSA PRIVATE KEY-----
MIICWwIBAAKBgQC2z2xAds9bKacV2Duehc+rS4GLpNcxuiSjlBn+zzkIiYbLcit9
pwoSpNq7nLa8UH2ebXnbOX0+p4dPsIXpvYk+5iWTI1a94v0Lgk/16FkiRmUwtazT
vgwlYMmKzbVUl8Zy6ZLF7LKUCFYrN+4sdIHGBHNo9/A0Fd49MLdGH7/X1QIDAQAB
AoGAEQ0fguhyETCSkfeKzA+dc1PwyovUD00ro17/iQ8JZKdLPYkHJHUNpqKIzRdi
ILDTSWaFsgcr4Z8HqyJYbe5iBs/nmomvgaK4Qz23oQS+NPP0uuk/1+EBt4jcRq21
Y2XWLRv2tiogDwUxUFbZV8vnCCXAQ2mf/K6ypqol4lsrDmsCQQC56eCL7suN/0BA
ISBeR6xCEGaeQp5643eA8QBa/7rn26R1NcC1eDI1QiXqpEvLOZiBZR51LyVJNjGU
xE9CE38TAkEA+7oMnpDFs0lU4Y8wJm9zUN1Vt56mN3FUqy1GSlzwFNJi4A5WjwvS
B3Dmdw/1rJ5XiiwKEED+GlXOubH19pvidwJAI4WmW9ZSml0M/7PUpW74YN8VXGPK
OBzCNqbVtI1sPuTetW6B1aqTnU14RS8DNF9a3k5d1XIeo7BxJMWlhzCGdQJAF1xi
w2xoDIVnrS8eptJ8/yorREki385SrzgaZ9hMJ4KGzohGHCxap3ogyTff8s8XDKmd
nXiKnGMONkl/rA03bQJAdPamV1KqT8zkLwBgGYrnO/JwpSubR8CryRFiui5RuwNS
CkEuFnYllo9fFwJri/0Cku/yH3dTGae8XmEEAPUsJg==
-----END RSA PRIVATE KEY-----
####### WORKER 2 #######
Public key:
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQDB9CN2X7jsRl8oqE9evUVCB1Vn7KDhbEqzlFBn1G9iD2LJ76ZeYQXzEJEwrBJUl7lPvTup+YNX3qIGWergswNak6+HTXi/I74ePA2THAEnbkQrtneaoCHCO6G+2MnEcmfF1CSIrqdjPxZK4fMgjcurILYk7ruJX61Ay6aHYGlkUw==
Private key (PEM)
-----BEGIN RSA PRIVATE KEY-----
MIICXQIBAAKBgQDB9CN2X7jsRl8oqE9evUVCB1Vn7KDhbEqzlFBn1G9iD2LJ76Ze
YQXzEJEwrBJUl7lPvTup+YNX3qIGWergswNak6+HTXi/I74ePA2THAEnbkQrtnea
oCHCO6G+2MnEcmfF1CSIrqdjPxZK4fMgjcurILYk7ruJX61Ay6aHYGlkUwIDAQAB
AoGAK7NlHCJkScRvY3tM6uVNihuZ6EEeXLxoNn84ALUSZa9ezG41d7V3wDUe6a8T
sEDBbdCO9XT1XaKZskGnVPqwyd7kzM2sggFqxDrI8vGUvh9jBYuByDiDwvapoehJ
1hDcFB8xaKhxcold9LdpcSAdmXra/cJS5UPhiQmr7RfG87kCQQDZtAcd4JZucbZE
fyyxSzJl6IEP12mBbLaBwl7K02mxKhBc6lkhVK4zYqA2/Ry2g9baWW0rUDFHkEgl
pk2bWRBrAkEA5BKUtnwNqhyW4zD+2QvCxZNB5PQfbnkSB3pYZt5YjvH7sPvCa80R
oER1wk1JV5WboOTivDRiwFmz3LT7AgtVuQJAeXP4LHDpO8BwoRIaCucavMPTjNTu
ZWgTAZ1AaQM9Cbuf2VZcVz342W4CV+spo6E1sicFwo5Aj94sgeSfkzVC9QJBANO0
s7pYmM6JTz6A4m9SzW9c69O9D9gaJjQuyxRh3E6ELJ/yclxitLPSGIVN/ICCbT4C
eL0+21O1cJG0pTMWlnECQQC6+KOfTJ29gqVZtkQWqVEXt38KxiebcI2INCZFgCxp
wLTIGH+qKFdnfSSGwdPN+xTckybcBsOrPYuLEIf6BPA9
-----END RSA PRIVATE KEY-----
```
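Because the private keys end up being pasted into files by hand (they are created with nano further on), it is easy to mix up which private key belongs to which public key. The following is a sketch that checks a pair using `ssh-keygen -y`, which prints the public key derived from a private key. The function name and the idea of saving each public key to an id_rsaN.pub file are assumptions. ssh-keygen, like ssh, rejects private keys whose permissions are too open, so run `chmod 600` on the files first:

```bash
#!/usr/bin/env bash
# Sketch: check that a private key file and a public key file belong together.
# key_pair_matches is a hypothetical helper. ssh-keygen -y derives the public key;
# only the first two fields (type and base64 blob) are compared, the comment is ignored.
key_pair_matches() {
  local priv="$1" pub="$2" derived expected
  derived="$(ssh-keygen -y -f "$priv" 2>/dev/null | awk '{print $1, $2}')"
  expected="$(awk '{print $1, $2}' "$pub")"
  [ -n "$derived" ] && [ "$derived" = "$expected" ]
}
```

For example: `key_pair_matches .ssh/id_rsa1 .ssh/id_rsa1.pub && echo "worker 1 key OK"`.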

We create the file that loads the provider so Terraform can connect to the Proxmox API, providers.tf:

```hcl
# DEFINE THE PROVIDER AND ITS VERSION
terraform {
  required_providers {
    proxmox = {
      source  = "telmate/proxmox"
      version = "2.9.14"
    }
  }
}

# PASS THE PARAMETERS THE PROVIDER NEEDS THROUGH VARIABLES
provider "proxmox" {
  pm_api_url      = var.pve_host
  pm_user         = var.pve_user
  pm_password     = var.pve_pass
  pm_tls_insecure = true

  # ENABLE LOGGING TO CAPTURE DETAILS OF THE PROCESS
  pm_log_enable = true
  pm_log_file   = "terraform-kubernetes-proxmox.log"
  pm_debug      = true
  pm_log_levels = {
    _default    = "debug"
    _capturelog = ""
  }
}
```
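The provider takes its endpoint and credentials from `var.pve_host`, `var.pve_user` and `var.pve_pass`. Terraform also reads a variable from an environment variable named `TF_VAR_<name>`, which overrides the variable's default, so the password does not have to stay in a file. The following is a sketch under that approach. The function names and the file name pve-env.sh are hypothetical, and the URL format mirrors the `pve_host` default (port 8006, path /api2/json). The file must be sourced (`. ./pve-env.sh`) so the exports remain in the current shell:

```bash
#!/usr/bin/env bash
# Sketch: feed providers.tf through TF_VAR_* environment variables.
# Terraform maps TF_VAR_pve_pass to var.pve_pass, overriding the default in vars.tf.
# Hypothetical file name: pve-env.sh. Source it instead of executing it.

# Build the endpoint in the same form as the pve_host default: https://<host>:<port>/api2/json
pve_api_url() {
  local host="$1" port="${2:-8006}"
  printf 'https://%s:%s/api2/json\n' "$host" "$port"
}

# Export pve_host, pve_user and pve_pass; the password is typed without being echoed.
export_pve_credentials() {
  local host="$1" user="${2:-root@pam}"
  TF_VAR_pve_host="$(pve_api_url "$host")"
  TF_VAR_pve_user="$user"
  read -r -s -p "Proxmox password for $user: " TF_VAR_pve_pass
  echo
  export TF_VAR_pve_host TF_VAR_pve_user TF_VAR_pve_pass
}
```

Typical use: `. ./pve-env.sh && export_pve_credentials minis.negu.local`, then run Terraform from the same shell.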

We create the variables file, vars.tf:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 |

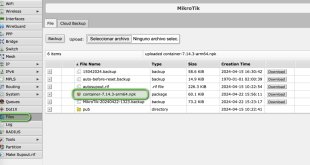

# DATOS SERVIDOR PROXMOX variable "pve_host" { description = "Servidor Proxmox Minis2" default = "https://minis.negu.local:8006/api2/json" } # USUARIO CONEXION variable "pve_user" { description = "Usuario Proxmox" type = string sensitive = true default = "root@pam" } # CONTRASEÑA PARA CONEXION USUARIO PROXMOX variable "pve_pass" { description = "Proxmox password" type = string sensitive = true default = "ElblogdeneguRootPassword" } # CONTRASEÑA DEL USUARIO LINUX ROOT DEL CONTENEDOR LXC variable "ct_pass" { description = "Container password" type = string sensitive = true default = "Elblogdenegu*123" } # NOMBRE DEL CONTENEDOR LXC variable "hostname0" { default = "KUBERNETES00" } # NOMBRE DEL CONTENEDOR LXC variable "hostname1" { default = "KUBERNETES01" } # NOMBRE DEL CONTENEDOR LXC variable "hostname2" { default = "KUBERNETES02" } # SERVIDOR DNS variable "dns_server" { default = "192.168.2.69" } # DOMINIO variable "dns_domain" { default = "negu.local" } # DATOS SSH-KEY0 variable "ssh_key0" { default = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQDJJlqRT1bgVT4cyR0ZNz6pC6oYsXWVt/91cPxZrxcrQp4aCUTWplfzRDiiZpvipuaA7hI5XO/1nDauLxFlJw2rMEdKn+eiuFzF3kBHjujYATo6LIKT+bD5IuuLrgFoR6Xj3sSUCU3kri8l61knW+z4GvEFKUN3dvOa3evYoglt4w==" } # DATOS SSH-KEY1 variable "ssh_key1" { default = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQC2z2xAds9bKacV2Duehc+rS4GLpNcxuiSjlBn+zzkIiYbLcit9pwoSpNq7nLa8UH2ebXnbOX0+p4dPsIXpvYk+5iWTI1a94v0Lgk/16FkiRmUwtazTvgwlYMmKzbVUl8Zy6ZLF7LKUCFYrN+4sdIHGBHNo9/A0Fd49MLdGH7/X1Q==" } # DATOS SSH-KEY2 variable "ssh_key2" { default = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQDB9CN2X7jsRl8oqE9evUVCB1Vn7KDhbEqzlFBn1G9iD2LJ76ZeYQXzEJEwrBJUl7lPvTup+YNX3qIGWergswNak6+HTXi/I74ePA2THAEnbkQrtneaoCHCO6G+2MnEcmfF1CSIrqdjPxZK4fMgjcurILYk7ruJX61Ay6aHYGlkUw==" } # PLANTILLA CONTENEDOR LXC QUE USAREMOS variable "template_name" { default = "UNRAID:vztmpl/debian-12-standard_12.2-1_amd64.tar.zst" } |
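Instead of pasting each public key into vars.tf, the values can be read from the .pub files. Terraform uses an environment variable named `TF_VAR_ssh_key0` as the value of `var.ssh_key0`, overriding the default. The following is a sketch, assuming the keys live in id_rsa0.pub to id_rsa2.pub inside the directory used by main.tf. The function name is hypothetical, and the function should be run in the shell you will run Terraform from:

```bash
#!/usr/bin/env bash
# Sketch: load var.ssh_key0..ssh_key2 from the .pub files (hypothetical helper).
# The default directory is the one used in main.tf; the .pub file names are an assumption.
export_node_ssh_keys() {
  local key_dir="${1:-/TERRAFORM/KUBERNETES-CLUSTER/.ssh}" i pub
  for i in 0 1 2; do
    pub="$key_dir/id_rsa$i.pub"
    if [ ! -r "$pub" ]; then
      echo "missing $pub" >&2
      return 1
    fi
    # TF_VAR_ssh_key0 -> var.ssh_key0, and so on; overrides the default in vars.tf
    export "TF_VAR_ssh_key$i=$(cat "$pub")"
  done
}
```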

In main.tf we define the characteristics of each container. Remember that, to run containers inside them, the “nesting” and “keyctl” features must be enabled:

```hcl
# RESOURCES FOR LXC CONTAINER KUBERNETES00
resource "proxmox_lxc" "KUBERNETES00" {
  count           = 1
  onboot          = true
  start           = true
  vmid            = "201"
  hostname        = var.hostname0
  ostype          = "debian"
  cores           = 2
  cpulimit        = 0
  memory          = 1024
  swap            = 512
  ostemplate      = var.template_name
  password        = var.ct_pass
  pool            = ""
  ssh_public_keys = var.ssh_key0

  rootfs {
    storage = "UNRAID"
    size    = "10G"
  }

  nameserver   = var.dns_server
  searchdomain = var.dns_domain

  network {
    name     = "eth0"
    bridge   = "vmbr0"
    ip       = "192.168.2.16/24"
    gw       = "192.168.2.69"
    firewall = false
  }

  features {
    nesting = true
    keyctl  = true
  }

  unprivileged = false
  target_node  = "minis"

  connection {
    type        = "ssh"
    user        = "root"
    private_key = file(pathexpand("/TERRAFORM/KUBERNETES-CLUSTER/.ssh/id_rsa0"))
    host        = "192.168.2.16"
  }

  provisioner "file" {
    source      = "config/script0.sh"
    destination = "/tmp/script0.sh"
  }

  provisioner "remote-exec" {
    inline = [
      "chmod +x /tmp/script0.sh",
      "/tmp/script0.sh args",
    ]
    on_failure = continue
  }
}

# RESOURCES FOR LXC CONTAINER KUBERNETES01
resource "proxmox_lxc" "KUBERNETES01" {
  count           = 1
  onboot          = true
  start           = true
  vmid            = "202"
  hostname        = var.hostname1
  ostype          = "debian"
  cores           = 2
  cpulimit        = 0
  memory          = 1024
  swap            = 512
  ostemplate      = var.template_name
  password        = var.ct_pass
  pool            = ""
  ssh_public_keys = var.ssh_key1

  rootfs {
    storage = "UNRAID"
    size    = "10G"
  }

  nameserver   = var.dns_server
  searchdomain = var.dns_domain

  network {
    name     = "eth0"
    bridge   = "vmbr0"
    ip       = "192.168.2.17/24"
    gw       = "192.168.2.69"
    firewall = false
  }

  features {
    nesting = true
    keyctl  = true
  }

  unprivileged = false
  target_node  = "minis"

  connection {
    type        = "ssh"
    user        = "root"
    private_key = file(pathexpand("/TERRAFORM/KUBERNETES-CLUSTER/.ssh/id_rsa1"))
    host        = "192.168.2.17"
  }

  provisioner "file" {
    source      = "config/script1.sh"
    destination = "/tmp/script1.sh"
  }

  provisioner "remote-exec" {
    inline = [
      "chmod +x /tmp/script1.sh",
      "/tmp/script1.sh args",
    ]
    on_failure = continue
  }
}

# RESOURCES FOR LXC CONTAINER KUBERNETES02
resource "proxmox_lxc" "KUBERNETES02" {
  count           = 1
  onboot          = true
  start           = true
  vmid            = "203"
  hostname        = var.hostname2
  ostype          = "debian"
  cores           = 2
  cpulimit        = 0
  memory          = 1024
  swap            = 512
  ostemplate      = var.template_name
  password        = var.ct_pass
  pool            = ""
  ssh_public_keys = var.ssh_key2

  rootfs {
    storage = "UNRAID"
    size    = "10G"
  }

  nameserver   = var.dns_server
  searchdomain = var.dns_domain

  network {
    name     = "eth0"
    bridge   = "vmbr0"
    ip       = "192.168.2.18/24"
    gw       = "192.168.2.69"
    firewall = false
  }

  features {
    nesting = true
    keyctl  = true
  }

  unprivileged = false
  target_node  = "minis"

  connection {
    type        = "ssh"
    user        = "root"
    private_key = file(pathexpand("/TERRAFORM/KUBERNETES-CLUSTER/.ssh/id_rsa2"))
    host        = "192.168.2.18"
  }

  provisioner "file" {
    source      = "config/script2.sh"
    destination = "/tmp/script2.sh"
  }

  provisioner "remote-exec" {
    inline = [
      "chmod +x /tmp/script2.sh",
      "/tmp/script2.sh args",
    ]
    on_failure = continue
  }
}
```
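After `terraform apply`, you can confirm on the Proxmox host that both features actually ended up on each container. The following is a sketch, assuming `pct config <vmid>` prints a line such as `features: keyctl=1,nesting=1`, which is the format used in /etc/pve/lxc/<vmid>.conf. The function names are hypothetical, and the VMIDs 201 to 203 come from main.tf:

```bash
#!/usr/bin/env bash
# Sketch: check that nesting and keyctl are enabled (hypothetical helpers).

# Return 0 if <feature>=1 appears in the "features:" line read from stdin.
has_feature() {
  local feature="$1" line
  line="$(grep '^features:' || true)"
  # "features: keyctl=1,nesting=1" -> one "key=value" per line, then look for feature=1
  printf '%s\n' "${line#features: }" | tr ',' '\n' | grep -qx "$feature=1"
}

# Run on the Proxmox host, e.g.: check_container_features 201 202 203
check_container_features() {
  local vmid f status=0
  for vmid in "$@"; do
    for f in nesting keyctl; do
      if ! pct config "$vmid" | has_feature "$f"; then
        echo "container $vmid: feature '$f' is not enabled"
        status=1
      fi
    done
  done
  return "$status"
}
```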

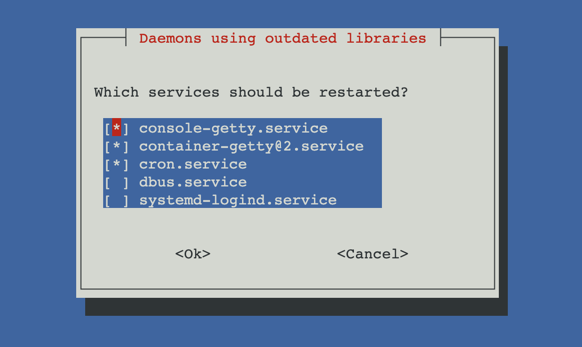

As post-installation scripts we can use something like the following (adapt it to your needs). I add the `--allow-change-held-packages` flag because otherwise the process fails at an interactive prompt:

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

```bash
#!/bin/bash
# Run apt without interactive prompts
export DEBIAN_FRONTEND=noninteractive

# UPDATE THE SYSTEM
apt update
apt upgrade -y

# HOSTNAME (keep uncommented only the line for the node this script runs on)
hostnamectl set-hostname "kubernetes00.negu.local"
#hostnamectl set-hostname "kubernetes01.negu.local"
#hostnamectl set-hostname "kubernetes02.negu.local"
echo '192.168.2.16 kubernetes00.negu.local kubernetes00' >> /etc/hosts
echo '192.168.2.17 kubernetes01.negu.local kubernetes01' >> /etc/hosts
echo '192.168.2.18 kubernetes02.negu.local kubernetes02' >> /etc/hosts

# INSTALL DOCKER AND DEPENDENCIES
apt install --allow-change-held-packages -y apt-transport-https ca-certificates gpg software-properties-common docker.io curl ufw

# ENABLE THE DOCKER SERVICE
systemctl start docker
systemctl enable docker

# MASTER FIREWALL
systemctl start ufw
ufw allow 22/tcp      # SSH: once ufw is enabled, other incoming traffic is denied
ufw allow 6443/tcp    # Kubernetes API server
ufw allow 2379/tcp    # etcd
ufw allow 2380/tcp    # etcd
ufw allow 10250/tcp   # kubelet API
ufw allow 10259/tcp   # kube-scheduler
ufw allow 10257/tcp   # kube-controller-manager
# (10251, 10252 and 10255 belong to older Kubernetes releases)
ufw --force enable    # --force skips the confirmation prompt

# WORKER FIREWALL (use this instead of the master block on the workers)
# systemctl start ufw
# ufw allow 22/tcp
# ufw allow 10250/tcp
# ufw allow 30000:32767/tcp
# ufw --force enable

# OPTIONAL: DISABLE THE FIREWALL
# iptables -F
# iptables -X
# iptables -P INPUT ACCEPT
# iptables -P OUTPUT ACCEPT
# iptables -P FORWARD ACCEPT

# DISABLE SWAP
swapoff -a
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# INSTALL KUBERNETES (v1.29 package repository)
mkdir -p -m 755 /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.29/deb/Release.key | gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /' | tee /etc/apt/sources.list.d/kubernetes.list
apt update
apt install --allow-change-held-packages -y kubectl kubelet kubeadm
systemctl enable kubelet
systemctl start kubelet

# REBOOT THE SYSTEM
reboot
```
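Before running kubeadm, it is worth confirming on each node that the script really installed the tools, since `on_failure = continue` in main.tf lets Terraform carry on even if the script failed. The following is a minimal sketch that reports which commands are missing from the PATH; the function name is hypothetical. The kubeadm installation guide linked above also recommends pinning the versions with `apt-mark hold kubelet kubeadm kubectl`, which is the kind of hold that `--allow-change-held-packages` overrides on later installs.

```bash
#!/usr/bin/env bash
# Sketch: print the commands that are not on the PATH (hypothetical helper).
# Empty output and exit status 0 mean everything is installed.
missing_tools() {
  local tool missing=()
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || missing+=("$tool")
  done
  echo "${missing[*]}"
  [ "${#missing[@]}" -eq 0 ]
}
```

For example, on each node: `missing_tools kubeadm kubelet kubectl || echo "installation incomplete"`.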

Here is the k3s script as well, although k3s is not the goal of this post:

```bash
#!/bin/bash
# UPDATE THE SYSTEM
apt update
apt upgrade -y

# HOSTNAME (keep uncommented only the line for the node this script runs on)
hostnamectl set-hostname "kubernetes00.negu.local"
#hostnamectl set-hostname "kubernetes01.negu.local"
#hostnamectl set-hostname "kubernetes02.negu.local"
echo '192.168.2.16 kubernetes00.negu.local kubernetes00' >> /etc/hosts
echo '192.168.2.17 kubernetes01.negu.local kubernetes01' >> /etc/hosts
echo '192.168.2.18 kubernetes02.negu.local kubernetes02' >> /etc/hosts

# INSTALL DEPENDENCIES
apt install --allow-change-held-packages -y apt-transport-https ca-certificates gpg software-properties-common curl ufw

# DISABLE SWAP
swapoff -a
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# INSTALL K3S
curl -sfL https://get.k3s.io | sh -
systemctl enable k3s

# MASTER FIREWALL
systemctl start ufw
systemctl enable ufw
ufw allow 6443/tcp
ufw allow 2379/tcp
ufw allow 2380/tcp
ufw allow 10250/tcp
ufw allow 10251/tcp
ufw allow 10252/tcp
ufw allow 10255/tcp
ufw reload

# WORKER FIREWALL
# ufw allow 10250/tcp
# ufw allow 30000:32767/tcp
# ufw reload

# OPTIONAL: DISABLE THE FIREWALL
# iptables -F
# iptables -X
# iptables -P INPUT ACCEPT
# iptables -P OUTPUT ACCEPT
# iptables -P FORWARD ACCEPT

# LATER STEPS ON THE WORKERS
# GET THE K3S TOKEN FROM THIS FILE ON THE MASTER
# cat /var/lib/rancher/k3s/server/node-token
# k3s_url="https://k3s-master:6443"
# k3s_token="***TOKEN***"
# curl -sfL https://get.k3s.io | K3S_URL=${k3s_url} K3S_TOKEN=${k3s_token} sh -
```

I create the directories and files:

```
root@TERRAFORM01:/TERRAFORM# mkdir KUBERNETES-CLUSTER
root@TERRAFORM01:/TERRAFORM# mkdir KUBERNETES-CLUSTER/config
root@TERRAFORM01:/TERRAFORM# mkdir KUBERNETES-CLUSTER/.ssh
root@TERRAFORM01:/TERRAFORM# nano KUBERNETES-CLUSTER/.ssh/id_rsa0
root@TERRAFORM01:/TERRAFORM# nano KUBERNETES-CLUSTER/.ssh/id_rsa1
root@TERRAFORM01:/TERRAFORM# nano KUBERNETES-CLUSTER/.ssh/id_rsa2
root@TERRAFORM01:/TERRAFORM/KUBERNETES-CLUSTER# nano vars.tf
root@TERRAFORM01:/TERRAFORM/KUBERNETES-CLUSTER# nano providers.tf
root@TERRAFORM01:/TERRAFORM/KUBERNETES-CLUSTER# nano main.tf
root@TERRAFORM01:/TERRAFORM/KUBERNETES-CLUSTER# nano config/script0.sh
root@TERRAFORM01:/TERRAFORM/KUBERNETES-CLUSTER# cp config/script0.sh config/script1.sh
root@TERRAFORM01:/TERRAFORM/KUBERNETES-CLUSTER# cp config/script0.sh config/script2.sh
```
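The two `cp` commands leave script1.sh and script2.sh identical to script0.sh, so without further edits every worker would also set the hostname kubernetes00. The following is a sketch that generates the copies instead. It comments out the active `hostnamectl` line and uncomments the one for node N. It assumes the hostname lines look exactly like those in the post-install script (`hostnamectl set-hostname "kubernetes0N.negu.local"`, with `#` for the inactive ones), and the function name is hypothetical. The firewall section still has to be switched to the worker rules by hand:

```bash
#!/usr/bin/env bash
# Sketch: derive a per-node script from script0.sh (hypothetical helper).
# Usage: make_node_script <source> <node-number> <destination>
make_node_script() {
  local src="$1" n="$2" dest="$3"
  [[ "$n" =~ ^[0-9]$ ]] || { echo "node number must be a single digit" >&2; return 1; }
  # 1st expression: comment out whichever hostnamectl line is active
  # 2nd expression: uncomment the line for kubernetes0<n>
  sed -e 's/^hostnamectl set-hostname /#hostnamectl set-hostname /' \
      -e "s/^#hostnamectl set-hostname \"kubernetes0${n}\./hostnamectl set-hostname \"kubernetes0${n}./" \
      "$src" > "$dest"
  chmod +x "$dest"
}
```

It could replace the two `cp` commands: `for n in 1 2; do make_node_script config/script0.sh "$n" "config/script$n.sh"; done`.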

We launch the deployment with Terraform. First we initialize:

```
root@TERRAFORM01:/TERRAFORM/KUBERNETES-CLUSTER# terraform init

Initializing the backend...

Initializing provider plugins...
- Finding telmate/proxmox versions matching "2.9.14"...
- Installing telmate/proxmox v2.9.14...
- Installed telmate/proxmox v2.9.14 (self-signed, key ID A9EBBE091B35AFCE)

Partner and community providers are signed by their developers.
If you'd like to know more about provider signing, you can read about it here:
https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has created a lock file .terraform.lock.hcl to record the provider
selections it made above. Include this file in your version control repository
so that Terraform can guarantee to make the same selections by default when
you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

We validate:

```
root@TERRAFORM01:/TERRAFORM/KUBERNETES-CLUSTER# terraform validate
Success! The configuration is valid.
```

And apply:

```
root@TERRAFORM01:/TERRAFORM/KUBERNETES-CLUSTER# terraform apply
```
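The init, validate and apply steps can also be chained in a single function. This is a sketch under a few assumptions. It saves the plan to a file (the name k8s.tfplan is hypothetical), so that `terraform apply` executes exactly the reviewed plan without asking for confirmation again. `TF_BIN` is a hypothetical override that allows a dry run with `TF_BIN=echo`:

```bash
#!/usr/bin/env bash
# Sketch: init -> validate -> plan -> apply in one go (hypothetical helper).
# Runs in a subshell so the caller's working directory is left untouched.
deploy_cluster() {
  local dir="${1:-/TERRAFORM/KUBERNETES-CLUSTER}" tf="${TF_BIN:-terraform}"
  (
    cd "$dir" || exit 1
    "$tf" init -input=false &&
    "$tf" validate &&
    "$tf" plan -input=false -out=k8s.tfplan &&
    # Applying a saved plan file does not prompt for approval
    "$tf" apply -input=false k8s.tfplan
  )
}
```

Running `TF_BIN=echo deploy_cluster` first prints the four commands without touching Proxmox.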

Once the LXC containers are created, it's time to configure Kubernetes. For this you can follow the kubeadm manual from the configuration step onward:

```bash
# INITIALIZATION MAY FAIL WITH ERRORS; IF SO, USE THIS COMMAND
kubeadm init --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=all
```
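When `kubeadm init` finishes, the usual next steps are to copy `/etc/kubernetes/admin.conf` so that kubectl works, and to join the workers with the command printed by `kubeadm token create --print-join-command`. The following is a sketch of both. The function names are hypothetical. `join_workers` assumes it runs from the Terraform host, which holds all three private keys, and it reuses the IPs from main.tf. The `--ignore-preflight-errors=all` on the join simply mirrors the init command above. The nodes will stay NotReady until a pod network add-on (CNI) is installed; 10.244.0.0/16, for example, is Flannel's default pod network.

```bash
#!/usr/bin/env bash
# Sketch of the usual steps after kubeadm init (hypothetical helper names).

# On the master: give kubectl the admin kubeconfig (kubeadm's default paths).
setup_kubeconfig() {
  local src="${1:-/etc/kubernetes/admin.conf}" dest_dir="${2:-$HOME/.kube}"
  mkdir -p "$dest_dir"
  cp "$src" "$dest_dir/config"
  chmod 600 "$dest_dir/config"
}

# From the Terraform host: get a join command from the master and run it on
# both workers, using the per-node keys and IPs from main.tf (assumptions).
join_workers() {
  local key_dir="${1:-/TERRAFORM/KUBERNETES-CLUSTER/.ssh}" join_cmd
  join_cmd="$(ssh -i "$key_dir/id_rsa0" root@192.168.2.16 \
    kubeadm token create --print-join-command)" || return 1
  # --ignore-preflight-errors=all mirrors the init command above
  ssh -i "$key_dir/id_rsa1" root@192.168.2.17 "$join_cmd --ignore-preflight-errors=all"
  ssh -i "$key_dir/id_rsa2" root@192.168.2.18 "$join_cmd --ignore-preflight-errors=all"
}
```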

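If you get errors, you may need to add these parameters to the LXC containers' configuration files on the Proxmox host (/etc/pve/lxc/<vmid>.conf, that is 201.conf, 202.conf and 203.conf for the containers defined in main.tf):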

```
lxc.apparmor.profile: unconfined
lxc.cap.drop:
lxc.cgroup2.devices.allow: a
lxc.mount.auto: proc:rw sys:rw
```
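To apply these settings to the three containers without editing each file by hand, the following sketch appends them in the `key: value` format that Proxmox uses in /etc/pve/lxc/<vmid>.conf. It skips any line that is already present, so it can be re-run safely. The function and array names are hypothetical, and the VMIDs 201 to 203 come from main.tf. The sketch also assumes the containers have no snapshots, because Proxmox stores snapshot sections (`[name]`) at the end of the file and appended lines would land in the last one:

```bash
#!/usr/bin/env bash
# Sketch: append raw LXC settings to a Proxmox container config only once.
# RAW_LXC_LINES / add_raw_lxc are hypothetical names; assumes no snapshot sections.
RAW_LXC_LINES=(
  "lxc.apparmor.profile: unconfined"
  "lxc.cap.drop:"
  "lxc.cgroup2.devices.allow: a"
  "lxc.mount.auto: proc:rw sys:rw"
)

add_raw_lxc() {
  local conf="$1" line
  for line in "${RAW_LXC_LINES[@]}"; do
    # -q quiet, -x whole line, -F fixed string: skip lines that are already present
    grep -qxF -- "$line" "$conf" || printf '%s\n' "$line" >> "$conf"
  done
}

# Hypothetical usage on the Proxmox host; the settings take effect on the next start:
# for id in 201 202 203; do
#   add_raw_lxc "/etc/pve/lxc/$id.conf" && pct stop "$id" && pct start "$id"
# done
```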


Blog Virtualizacion: your virtualization blog in Spanish. Virtual machines (El Blog de Negu) in Spanish. IT blog by vExpert Raul Unzue
